Boost Enterprise and Edge Data Center Cost-Effectiveness for AI Inferencing

Firms operating enterprise edge data centers are turning to AI inferencing to unlock new insights and drive innovation, investing in new infrastructure tailored for AI workloads. Blaize-based solutions are designed to bring AI to enterprise edge data centers efficiently and cost-effectively. They target data centers of all types, including small and midsized operations, and aim to provide a total cost of ownership (TCO) advantage over alternatives.

Decision makers may be concerned that implementing AI inferencing and upgrading infrastructure is out of reach and out of budget, with the potential to drive up costs across the balance sheet. One area of concern is the OpEx associated with AI inferencing, such as the power consumed by computation, cooling costs to run facilities, and bandwidth costs incurred from processing and routing data. Another is CapEx, which is tied to hardware and infrastructure investments and ongoing maintenance expenses. Blaize solutions offer TCO savings over alternatives, addressing both OpEx and CapEx concerns.

In this blog, we’ll explore why the number of enterprise edge data centers is increasing and illustrate how Blaize is working to help firms realize cost-effective AI inferencing without breaking the bank.

Why Invest in Data Centers at the Edge?

Enterprise edge data centers have proliferated in recent years, a trend driven largely by the rise of 5G, the ubiquity of IoT devices in everyday life, and the increased use of video streaming on the web, among other factors. What these have in common is the need to reduce cost and enable low-latency processing, which enterprise edge data centers can provide. These data centers are typically small to medium-sized facilities situated close to the primary source of data center traffic, in densely populated areas, as opposed to massive complexes built far outside city limits. These custom data center solutions are well suited to managing AI workloads and may be housed in a small building or even a server closet located close to the data source and its delivery point.

AI-enabled enterprise edge data centers are all around us: for example, in manufacturing facilities, factories, hospitals, traffic management control centers, airports, and small and medium-sized enterprises. They appear wherever there is a case for AI to be closer to the source and users of data, a nexus where privacy, proximity, efficiency, and latency are considerations. To illustrate how a firm might benefit from implementing an enterprise edge data center, let’s look at one specific example to understand how Blaize-based solutions can help.

Consider a retail mall in the United States with more than 100 units, each a store whose cameras are used to analyze customer behavior, detect security or safety threats early, track inventory levels, measure marketing effectiveness, manage crowd flow, and so on. Such a mall can have upwards of 1,000 cameras feeding an enterprise edge data center, and its operators want to reduce total operational and infrastructure costs. This is where Blaize solutions can help.

Blaize Helps Reduce TCO

The primary focus of most data center decision makers is to deliver performance while keeping costs as low as possible. As AI workloads become more ubiquitous, AI accelerators are playing a large role in the edge data center model, and finding cost-effective accelerators is paramount. For our retail example of running 1,000 camera feeds, the cost factors boil down to energy for AI processing, bandwidth, cooling of systems, auxiliary costs for running a facility (i.e., keeping the lights on), and maintenance.
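As a rough illustration of how these cost factors combine, the sketch below adds a one-time hardware outlay to the recurring costs over a planning horizon. It is a simplified model, not Blaize's TCO methodology: the names `AnnualCosts` and `total_cost_of_ownership` are hypothetical, maintenance is treated as an annual cost for simplicity, and every figure in the example is a placeholder rather than measured data.

```python
from dataclasses import dataclass


@dataclass
class AnnualCosts:
    """Recurring yearly costs, in any one currency (placeholder categories)."""
    energy: float       # power for AI processing
    cooling: float      # cooling of systems
    bandwidth: float    # processing and routing data
    facility: float     # auxiliary costs, i.e., keeping the lights on
    maintenance: float  # treated as annual here (an assumption)

    def total(self) -> float:
        return (self.energy + self.cooling + self.bandwidth
                + self.facility + self.maintenance)


def total_cost_of_ownership(capex: float, annual: AnnualCosts, years: int) -> float:
    """One-time hardware spend plus recurring costs over `years`."""
    return capex + years * annual.total()


# Placeholder numbers only, chosen to make the arithmetic easy to follow.
example_costs = AnnualCosts(energy=5_000, cooling=2_000, bandwidth=3_000,
                            facility=1_000, maintenance=4_000)
example_tco = total_cost_of_ownership(capex=100_000, annual=example_costs, years=5)
print(f"Example 5-year TCO: {example_tco:,.0f}")
```

Comparing two candidate platforms then amounts to calling the function once per platform with that platform's own estimates.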

The Blaize® Xplorer® Product Family, featured in our previous blog, Blaize® GSP® Technology: Addressing Enterprise and Edge Data Center Market Needs, helps to cost-effectively address these concerns with high-density computing while reducing the server room footprint required for the same amount of work. Blaize® Xplorer® X1600E EDSFF accelerator cards are designed to be installed in off-the-shelf, industry-standard server appliances. For example, a typical OEM-manufactured 1U EDSFF server unit can host up to 24 or 32 cards. This can enable a major increase in compute density compared to alternative hardware platforms and has the potential to deliver 2-5x cost savings in hardware expenditure for the same level of AI inference work. From an operational standpoint, these systems can enhance workload efficiency by averaging 8W per card. Blaize accelerators require near-zero management to handle AI/ML workloads, resulting in ultra-efficient CPU usage: less than 1 core out of 32 (as tested on an EPYC 7502) is required to manage all cards.
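The figures above lend themselves to a quick back-of-the-envelope check. The sketch below multiplies the 8W per-card average by the 24 or 32 cards a 1U server can host, then converts the result to yearly energy. It assumes the cards run continuously at the average draw and covers accelerator power only; host CPU, memory, fans, and cooling are excluded. The function names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # continuous operation, an assumption


def accelerator_power_watts(cards: int, watts_per_card: float = 8.0) -> float:
    """Average accelerator draw for one server, in watts."""
    return cards * watts_per_card


def annual_energy_kwh(power_watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy used over `hours` at a constant draw, in kilowatt-hours."""
    return power_watts * hours / 1000.0


for cards in (24, 32):
    watts = accelerator_power_watts(cards)
    kwh = annual_energy_kwh(watts)
    print(f"{cards} cards: {watts:.0f} W average, about {kwh:,.0f} kWh per year")
```

Multiplying the yearly energy by a local electricity rate gives an energy cost estimate for the accelerators alone.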

To help illustrate power savings, let’s return to our retail mall example, in which 1,000 cameras are processed in an enterprise edge data center operating with a legacy solution.

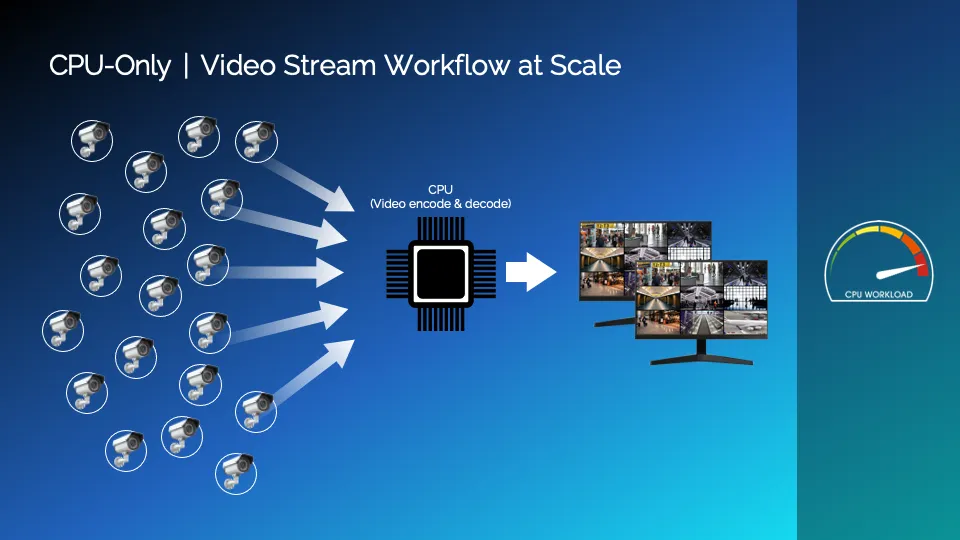

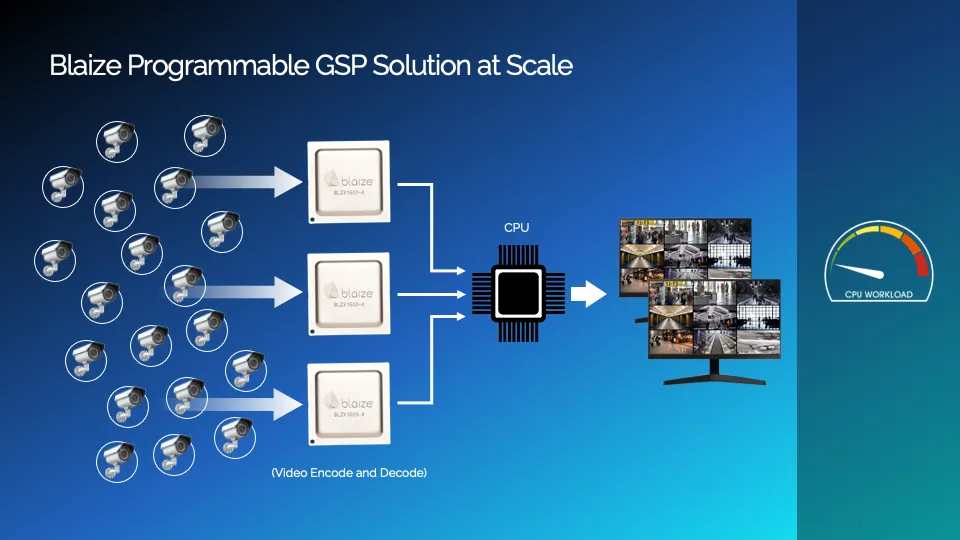

As the camera systems feed into the enterprise edge data center, the CPU may become overwhelmed with video encoding and decoding tasks at scale, leading to delays and increased power consumption. Blaize solves this problem with its programmable GSP accelerators, processing video tasks at the edge without burdening the CPU.

This solution is designed to reduce bottlenecks and latency, decrease power consumption, and enhance overall system efficiency by optimizing the distribution of tasks and resources. It supports these cameras for video analytics applications with low latency and high throughput compared to legacy hardware solutions. Instead of adding AI capability to each camera or an AI box to each store, which is costly and complex, all the camera feeds are routed into a centralized unit: a single server can handle thousands of video streams in parallel, efficiently performing complex video analytics in real time.

Blaize Delivering on Cost-Effective AI Inferencing for Enterprise Edge Data Centers

The points addressed in this blog show that AI inferencing can be cost-effective for firms operating enterprise edge data centers, allowing them to harness new insights and drive innovation without overspending. Enterprise adoption of edge inference is gaining momentum as firms recognize its benefits and value. As a result, the cost of inference is becoming a major concern, both operationally and from an infrastructure standpoint, which highlights the increasing importance of efficient, AI-tailored infrastructure for enterprise edge data centers. Blaize’s Xplorer Product Family and GSP Technology offer high-density computing in small footprints, significantly reducing TCO and enhancing system efficiency by offloading video processing tasks from CPUs. This enables OEMs to build systems and solutions that deliver inference at the edge efficiently and at a low cost of ownership, with substantial power savings and improved performance, supporting many video streams with low latency and high throughput.

In future blogs, we’ll examine case studies of Blaize GSP Technology in use today in key verticals and showcase how Blaize is helping firms realize TCO savings in AI inferencing without sacrificing results.